How do we know anything? Some say that we know external reality only through perceptions, and through analysis of and thoughts about these perceptions. But can we know intuitively through an entirely different process? Are creativity and genius in science an internal understanding from another source of knowledge? (That is, the internal discovery of mathematical principles as physical reality, rather than an invention such as a sculpture.) Or are they based only on thoughts about perceptions derived from sensory data? Is it possible that an expanded mental state, such as self-observation, alters perception?

Current science only describes reality with proof from experiments in the external world and a mind analyzing perceptions, perhaps with mathematics—a form of thought. These perceptions then have to be validated by other people. Science is based on multiple minds agreeing that they have the same perception of an event. But, what is a mind?

Despite generations of neuroscience, including an enormous number of brain imaging studies, there is no plausible explanation of how subjective experience arises in the brain or of what the mind is. Since most scientific advances have been in molecular biology and MRI imaging, the current popular but unproven assumption is that molecules in the brain somehow create mind. Theories of how this might happen include computer-like properties of trillions of synapses, electrical brain waves, quantum computation in neuronal microtubules, and the physics of information (see the posts on each theory, namely neuronal connections, brain waves, quantum effects and information, as well as the Limits of Current Neuroscience for the problems with each). Currently, there is no proof that any of these is mind or subjective experience. How can a theory that places mind in a single brain be true if mental events, such as the concepts of science and culture, are shared by many brains at the same time?

Although it is not clear what perceptions are, they are a critical way that we know, being derived from both external senses and internal sources.

Senses Gather Information

What is picked up by the senses? Out of the full range of physical forces impinging on an individual, a small narrow range is picked up by sensory receptors—electromagnetic waves, vibrations of force, gravity, and chemicals. These translate into the senses of sight, hearing, touch, balance and smell.

Sensory information from these receptors turns into neuronal information involving electrical signals, neurotransmitters, cytokines, synapses and brain waves. Higher brain regions integrate this data and create the scenes, scenarios and thoughts that we call perceptions. We naively assume that these perceptions are reality.

What we learn from the senses is, in fact, very limited.

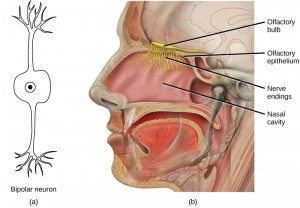

Smell Is Different in Each Individual

One important new finding is that each human being receives a somewhat different set of sensory information, which makes comparisons between people inexact. An important example was recently discovered for the sense of smell.

Of all the senses, the receptors of olfactory neurons are the most directly connected to the brain with a very short circuit to the cortex. This makes smell very important for many types of perceptions, including emotional circumstances and social decision-making. People often disagree on a smell or the impact of the smell and it has not been clear why.

Recently, it was found that everyone perceives scents differently. Noses have 400 genes for receptors, with 900,000 receptor variations. The smell receptors of any two people will differ by at least 30%. In fact, with all of these different combinations, no two people actually smell the same way.

Another recent study found that neurons themselves evaluate information without input from the brain. Neurons in the noses of mice react more strongly to threatening odors, even before the signal goes to the brain. Somehow the sensory neuron understands and reacts without a reflex.

Sight from Narrow Band of Frequencies

A major limitation of all sensory receptors is that they respond to only a narrow band of the sensory data that could be available. Human vision covers an extremely small range of frequencies of the electromagnetic spectrum. Very different events would be "seen" if the bandwidth were wider. In fact, many animals respond to different and wider bandwidths and detect different factors in the environment. Consider what information we would understand about the environment if we could perceive other ranges of electromagnetic waves, such as ultraviolet, infrared, X-rays and microwaves.

In peripheral vision, one neuron is connected to 100 rod cells. Therefore, much of the detail from peripheral vision is missed. In central vision, the ratio is much closer to one neuron for each cone receptor. But these cells also miss data, because they must be constantly refreshed and the eyes are constantly scanning. Much of the data is filtered out before it reaches the cortex.
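To make the point about convergence concrete, here is a deliberately simplified sketch. It assumes that each receptor's signal is just a number and that a hypothetical shared neuron simply adds up its 100 rod inputs; real retinal circuits are far more complex, and the function names are illustrative only. Two different patterns of light that deliver the same total produce identical pooled output, while one-to-one wiring keeps them distinct.

```python
from typing import List


def pooled_response(rods: List[float]) -> float:
    """Hypothetical peripheral wiring: one neuron sums all of its rod inputs."""
    return sum(rods)


def one_to_one_response(cones: List[float]) -> List[float]:
    """Hypothetical central wiring: each receptor keeps its own channel."""
    return list(cones)


# Two different light patterns across 100 receptors with the same total light.
pattern_a = [1.0] * 50 + [0.0] * 50  # left half lit
pattern_b = [0.0] * 50 + [1.0] * 50  # right half lit

print(pooled_response(pattern_a) == pooled_response(pattern_b))  # True: detail lost
print(one_to_one_response(pattern_a) == one_to_one_response(pattern_b))  # False: detail kept
```

Summation is the simplest possible pooling rule; it is used here only because it shows most plainly how many inputs collapsing into one signal discards where the light fell.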

Before the brain “sees” what is there, visual information is filtered by emotional brain centers for danger. With a dangerous object approaching, a flinch is triggered before any visual analysis is done in the traditional visual cortex. Remarkably, recent research shows that when a subject’s visual cortex was damaged so he could not see, the amygdala still picked up someone just turning to look at the subject.

Another recent study showed that the visual cortex of humans uses compression algorithms, like video protocols, to suppress redundant information and only sends the differences in images. Neurons subtract the past and current images and use only the differences. So, although we think we see the entire scene, our minds only pick up the differences that are occurring at each instant.
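The idea of sending only the differences between successive images can be sketched as simple temporal difference coding, the same basic principle behind inter-frame compression in video. This is a toy illustration under stated assumptions, not a model of neurons: each "frame" is a hypothetical list of equal-length pixel values, the first frame is kept whole, and each later frame is stored only as the pixels that changed since the previous one.

```python
from typing import Dict, List, Tuple

Frame = List[int]
Delta = Dict[int, int]  # pixel index -> new value


def encode(frames: List[Frame]) -> Tuple[Frame, List[Delta]]:
    """Keep the first frame whole; for each later frame keep only changed pixels."""
    first = list(frames[0])
    deltas: List[Delta] = []
    previous = first
    for frame in frames[1:]:
        # Subtract the past image from the current one: record only what differs.
        delta = {i: v for i, (p, v) in enumerate(zip(previous, frame)) if p != v}
        deltas.append(delta)
        previous = frame
    return first, deltas


def decode(first: Frame, deltas: List[Delta]) -> List[Frame]:
    """Rebuild every frame by applying each set of differences in turn."""
    current = list(first)
    frames = [list(current)]
    for delta in deltas:
        for i, v in delta.items():
            current[i] = v
        frames.append(list(current))
    return frames


example = [[0, 0, 0, 0], [0, 0, 5, 0], [0, 0, 5, 0], [1, 0, 5, 0]]
first, deltas = encode(example)
print(deltas)  # [{2: 5}, {}, {0: 1}]
print(decode(first, deltas) == example)  # True
```

When nothing changes between frames, nothing is sent (the empty `{}`), which mirrors the claim that only the changes occurring at each instant are passed along. Real video codecs add motion estimation and lossy quantization, which this sketch leaves out.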

Also, a recent study shows that the eye sees colors differently based upon whether there is a black or white background. Sensory information is only meaningful when compared to something else. Both vision and touch senses embellish and fill in any missing information in a scene.

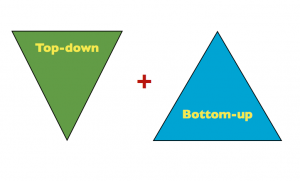

When the information does reach the cortex it is merged with information from all the other senses and with thoughts and emotions. There are, in fact, many more neurons sending information down from the cortex (top-down signals) than are coming up through incoming sensory data (bottom-up signals). This cortical top-down influence determines what we perceive even more than the sensory data.

All of our senses have many more top-down neurons manipulating the data than neurons carrying information up. Whatever determines the top-down effects is determining the perceptions.

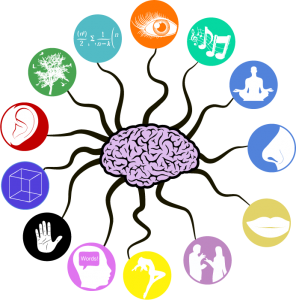

Multi Sensory Perceptions

Previous posts have described the unique effects of the multi sensory brain. For many years it was thought that most of the brain is composed of modules for individual senses and that these are integrated only in association areas. Recently, it has been demonstrated that most cortical neurons have multi sensory connections. This integration has many effects on perceptions.

One important effect is that visual information takes precedence over auditory information. During speech, if the auditory information differs from the observed lip movement, the lip movement will be "heard" instead of what the auditory signals actually convey. Surprisingly, when musical experts evaluate a musical performance that they can both see and hear, their ratings are driven by what they see. A totally different rating occurs if they can only listen to the performance. Speech, in fact, can be perceived by sight, by hearing and, as with Helen Keller, by touching the lips, cheek and neck.

There are many other strange multi sensory effects on perception, like the sensation of a desk rising, if the subject’s hands are on the desk and the subject is watching a video of a waterfall. Another is an orange drink tasting like cherry, if it is colored red.

Perceptions, Cells and Molecules

Perceptions are critical for our ability to understand and make decisions, and they also correlate with molecular neuroplastic alterations in wide-ranging neuronal circuits. Mental events, such as perceptions, trigger many varied simultaneous mechanisms, such as new neurotransmitters, receptors, dendritic spines, microtubule and actin scaffolding, myosin motors and many more (see the post on neuroplasticity). Perceptions correlate with brain events across at least six orders of magnitude at the same instant, spanning molecules, neurons, brain circuits and social interactions. A mental event involving other people simultaneously triggers neuronal brain circuits, bodily organs, movement and genetic alterations in individual immune cells. Important examples of perceptions interacting across this total range of orders of magnitude have been recently described.

Perceptions of social interactions have unusual effects on networks of genes deep inside neurons and immune cells. A recent study showed that isolation causes an increase in inflammation factors and a decrease in the defense against viruses. However, it is not the physical reality of the isolation, but rather the perception of the isolation that affects the immune factors. When someone is in prison, negative immune responses do not occur if he or she has a close mental connection with someone.

Happiness from altruistic behaviors affects immune cells’ genetic networks. Happiness derived from many activities has no effect on immune factors. But, happiness derived from community service decreases inflammation and increases viral defense. It is the type of happiness, the meaning or perception of the happiness, that causes the genetic networks to respond.

Top-Down Versus Bottom-Up

In all studies of perception, incoming sensory information impacts perception less than the top-down neurons coming from the cortex.

Top-down effects on perceptions include a picture of a bright light causing the pupils to react as if it were real physical brightness. Another occurs when subjects consider the abstract concept of a good deed and the room appears brighter. Likewise, recalling a bad deed has the opposite effect.

Other experiments show that words related to food appear brighter and clearer if the subject is hungry. Poor children perceive coins as larger. Good baseball hitters see a larger ball. Hunters who are holding a gun are more likely to think that others are holding a gun even when they aren't. Large people judge the absolute width of a doorway as narrower than others do. The same is true for hunters when they are trying to decrease their target acquisition time.

Top-down effects occur with words and thoughts that alter sensory information. “She kicked the ball” stimulates the leg brain regions related to kicking. When someone says “wet behind the ears”, the metaphor triggers brain regions of the sense of wetness and the ears, as well as the language centers.

All of these involve top-down cortical neurons influencing sensory neurons.

Unconscious Mind, Expectation and Perceptions

Subjects are told to watch a stage with specific action. While they study the action, a man in a gorilla suit walks across the stage, bows and then leaves. Many of them never notice the gorilla. Why? They are focusing intensely on the other action and do not expect a gorilla. In another study, radiologists examined patients' scans for illness. Most of them did not see the gorilla implanted in the images. Radiologists don't expect a gorilla in a medical scan, either.

People only see what they expect; they see what is new and useful based on what they expect from previous experiences. We base decisions on previous experiences by forming a pattern or scenario. Social perceptions are based on expecting people to act as our family or close friends have in the past.

Much decision-making is unconscious and how it affects perceptions is not clear. One study found that a good typist doesn’t know what their fingers are doing. The typing is unconscious and is learned in the motor memory regions of the brain. Another recent study shows that if we look at patterns that have hidden objects embedded in them, we will consciously see some of the objects, and unconsciously see others. The subconscious understands the meaning of the hidden objects, while we are not consciously aware of them.

Expectations that affect perception can be conscious or unconscious, and they are shaped by desires, memories, pain, drugs and medical conditions.

Conscious Mind and Perception

Do we know what determines the top-down cortical influences on perceptions? While many influences are unconscious, can anything be learned about perceptions through the study of conscious mental behavior? Does conscious mental focus determine perceptions?

Conscious focused attention is actually rare in ordinary human life. Most of the time people are buffeted by random influences and suggestions from the environment, social interactions and the 24/7 media assault. We often respond unconsciously to these constant suggestions and demands.

One type of conscious activity is imagining a scene before looking at it. This conscious act alters the perception. Feeling an emotion first, before looking at the scene, also alters the perception.

A powerful conscious mental activity is the mental focus in meditation. Research shows that this self-directed mental activity has dramatic effects on inflammatory and viral factors, mitochondrial energy, cardiac disease, hypertension, posttraumatic stress, pain and fearfulness (see the post Meditation Update 2013 for the many beneficial effects that occur with meditation). Meditation also alters perceptions.

Self Observation Alters Perception

In meditation, a part of the mind becomes the witness or the self-observer of mental events—sometimes referred to as mindfulness. Observing the mind also occurs in psychotherapy. In both, by focusing the mind on self-observation, an ability to watch mental events as they occur, without the usual emotional reaction, is strengthened.

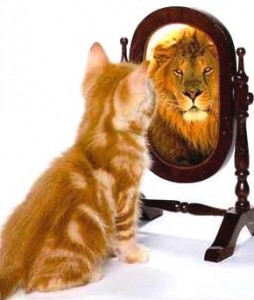

With a growing power to step back from the emotional impact of thoughts and experiences, self-observation alters perception. The perception of a fearful situation becomes clearer and less severe. Anxiety changes to calm. The world can appear brighter. Effective self-observation is equivalent to "living in the present," where the world appears different, without the impact of thoughts about the past and future. In this state, nature can be experienced more directly and totally new creative observations occur. In advanced states of meditation, alterations of perception include feelings of awe, bliss and oneness with the universe.

Self-observation increases inner experience and at the same time alters perceptions. In this expanded mental state, is the top-down direction coming from an internal mental source that is more than sensory data and thoughts about sensory data?

Is this deeper experience of mind an intuitive source of knowledge that alters perceptions while simultaneously affecting immune and physiological functions seen with meditation? Is this expanded inner state similar to internal mental mathematical discovery of physical reality? Does the expanded inner realm trigger expanded physiological functions and altered perceptions?